Communicating Via Thought Waves Alone: Q&A With Miguel Nicolelis

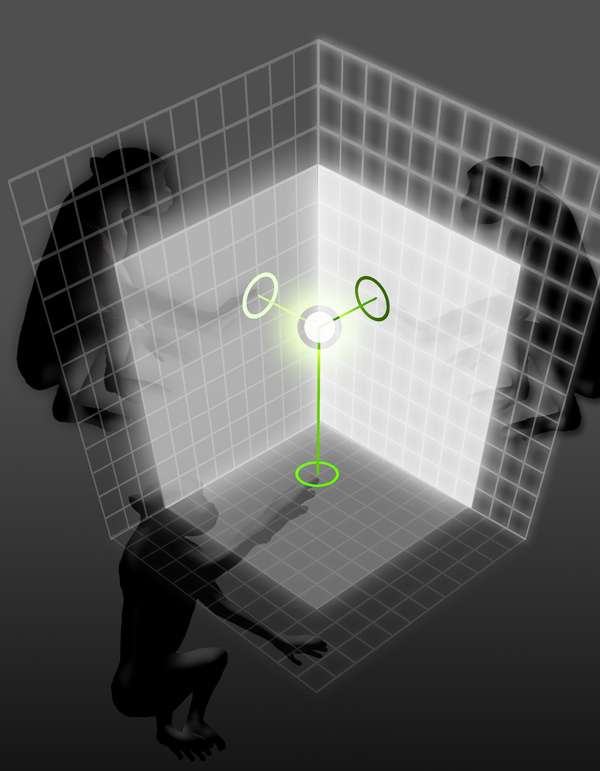

Duke University neuroscientist Miguel Nicolelis, M.D., Ph.D., is famous for his 20 years of research on brain-machine interfacing, in which (among other things) EEG sensor caps on disabled patients’ heads let them control cursors on computer screens with the force of their thoughts, their own neurons’ electricity. That research, which first made science news in 2000 with a Nature paper, made global news in 2014, when the paralyzed Juliano Pinto delivered the opening World Cup kick by “thinking” his digital leg into action, tapping into what Nicolelis calls the “hundred billion electrical brainstorms” of the human brain.

Essentially, sensors on Pinto’s EEG cap read his “electrical brainstorms,” which were fed into a computer, which generated motor commands to his “exoskeleton.”

While perfecting this, Nicolelis’ team moved on. This month they published two papers in Scientific Reports on brain-brain interfaces, or “organic computers,” in which animals whose brains were wired together were able to mentally exchange sensory and motor information to solve problems together—often better than they could alone. One starred four rats with multi-electrode arrays implanted in their primary somatosensory cortices. One starred four rhesus monkeys.

Bioscience Technology talked to Dr. Nicolelis recently about the two new papers:

Q: Why do two studies at once?

A: We usually start testing ideas in rats as it is cheaper, easier, faster. Every concept we created in our lab over 20 years we tested in rats first. It turned out that in this one, the rats worked out very quickly, so we started the monkey study. Because of the review process, it turned out the monkey paper got reviewed very quickly, so the journal put them together. But the monkey study is the one that has more practical implications for the future. You are probably aware of brain-machine interfaces. When John Chapin and I created this paradigm in 1999, the idea was to study the brain and produce neuro-prosthetic devices that could help people move again. That has materialized a decade later. But to this day, all the working brain-machine interfaces, which have become very popular, have been done with a single subject operating the interface. So a few years ago I asked myself and my students, “Could more than one subject’s brain collaborate to achieve a common task?” The results are in these two papers.

Q: Were you surprised by any findings?

A: We were very surprised that it could be done, and more easily than thought. If monkeys were proficient in a task alone, they could readily synchronize their brains to produce a common output, and move an avatar arm in two or three dimensions collaboratively (one would mentally move it up and down, while another would mentally move it side to side, for the reward of juices).

Brain synchronization happens all the time

We were interested in getting multiple animals to synchronize brain activity. It only took visual feedback, a computer screen in front of each animal with the image of the avatar arm and the reward for completing each trial successfully. The ease with which this worked suggests this kind of synchronization in our brain activity may happen all the time, when we are watching TV in different houses around the world, or in a movie theater. The sound and visual feedback we are getting from the screen may synchronize brains of entire audiences. We may have stumbled on a brain mechanism that explains why audiences react together.

If you put together brain-brain and brain-machine, in the future multiple users may collaborate in brain-brain-machines, with very important clinical applications.

Q: In a video of one-on-one brain-brain, a rat presses a bar after getting signals from electrodes in his brain attached to the brain of an unseen rat. Was he pretrained?

A: He knew the gist of the task, and that he would get food, but as you saw, he has to react to what he is getting into his brain. He is not getting any external signals. He had no clue at that point that he was not going to get any more visual or tactile stimulation, as he had earlier. He just responded to the electrical stimulation of another rat’s thoughts (which his brain had come to recognize).

In this new paper, in rats, it was even more abstract, because the rats were never pre-exposed to a task, they were just part of a cohort of animals. Somehow the group’s brains worked together and evolved a solution. We didn’t tell them how. The network of brains, the “Brainet,” evolved the solution. By itself.

Q: Can you give us an example?

A: Basically you input the pattern into the Brainet, like the letter D. You want to see if you can retrieve that same pattern later from the collective activity of the brains. So you code the letter into electrical pulses delivered to a couple of the brains, or to all the brains. And a few minutes later you try to recover it, try to reconstruct the letter, and that is what they did.

Q: How was this possible?

A: By using the electrical activity of the brains that work together. This is pattern recognition. In the last experiment, we delivered inputs to the Brainets: one bit of information about the temperature going up or down, and another about the pressure going up or down. We wired the rats together so the brains collaboratively could mix the information to tell whether it will rain: the weather forecast app. The network of brains did it. Tasks we use to benchmark new computers, we are using to test what brain apps can do.
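To make the idea of mixing two one-bit inputs concrete, here is a minimal Python sketch. The interview does not say which combination of temperature and pressure counted as "rain," so the rule below (temperature rising while pressure falls) is an illustrative assumption, and the function name `forecast_rain` is hypothetical. It is not the authors' decoding method.

```python
def forecast_rain(temperature_rising: bool, pressure_rising: bool) -> bool:
    """Combine two one-bit inputs into a one-bit forecast.

    ASSUMPTION (not stated in the interview): rain is predicted only
    when temperature is rising and pressure is falling.
    """
    return temperature_rising and not pressure_rising


# Enumerate all four input combinations of the two bits.
table = {
    (t, p): forecast_rain(t, p)
    for t in (False, True)
    for p in (False, True)
}
```

The point of the sketch is only that neither bit alone determines the answer; the two inputs have to be combined, which is what the wired-together brains were asked to do.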

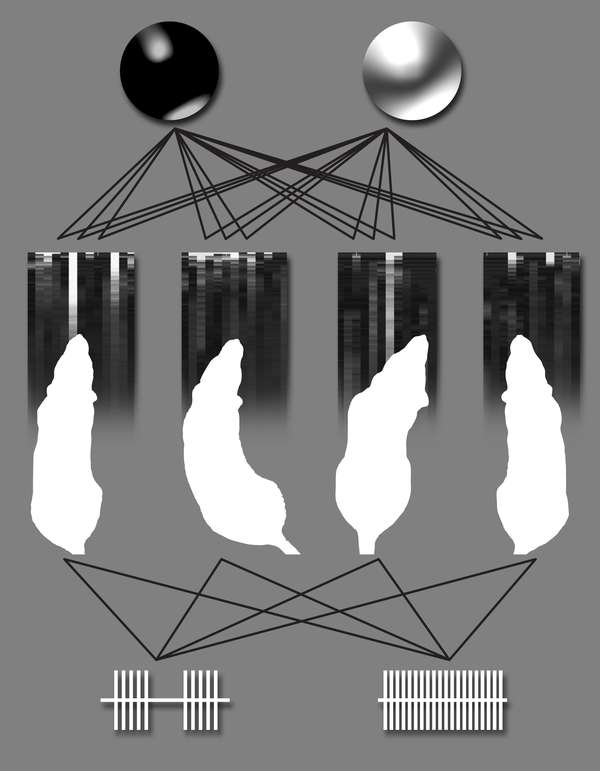

Q: How does it proceed when you want to teach rats the letter D?

A: It’s very simple. The letter D is a combination of thoughts, right? You have black thoughts and white thoughts in a 2D space. Each thought that is empty you give a zero. If there is a thought, you give it a one. You make a binary representation of an image, and you deliver it to the brain via electrical pulses. So if it is one, you give a pulse; if it is zero, there is no pulse. Then you read out what this network produces in electrical pulses, do the reverse pathway, and reconstruct the letter. The Brainet does this 86 percent correctly; it could reconstruct 86 percent of letters we input.
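The encode-and-reconstruct loop described in this answer can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the 5x5 bitmap, the row-by-row ordering, and the names `encode`, `decode`, and `fraction_correct` are all hypothetical, and the "network" here is a perfect pass-through rather than a group of brains.

```python
# Hypothetical 5x5 bitmap of the letter D (1 = filled cell, 0 = empty cell).
LETTER_D = [
    "11110",
    "10001",
    "10001",
    "10001",
    "11110",
]


def encode(bitmap):
    """Flatten the bitmap row by row into a pulse train: 1 = pulse, 0 = no pulse."""
    return [int(ch) for row in bitmap for ch in row]


def decode(pulses, width):
    """Reverse pathway: regroup the pulse train into rows of the given width."""
    return [
        "".join(str(p) for p in pulses[i:i + width])
        for i in range(0, len(pulses), width)
    ]


def fraction_correct(sent, received):
    """Share of positions where the read-out matches what was delivered."""
    matches = sum(s == r for s, r in zip(sent, received))
    return matches / len(sent)


pulses = encode(LETTER_D)
reconstructed = decode(pulses, width=5)

# Simulate a read-out with a single flipped cell to show imperfect recovery.
noisy = pulses.copy()
noisy[0] = 1 - noisy[0]
score = fraction_correct(pulses, noisy)
```

With a lossless channel the reconstruction equals the input exactly; the flipped cell shows how a per-cell accuracy score could be computed when the read-out is imperfect.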

Q: This is done using binary code?

A: Yes, we use a binary code to represent the letters and then we retrieve that. You can summarize the rat paper basically by saying we tested whether this group of rat brains could do simple computations and they could, and the performance was sometimes better than that of individual rats. That’s a big deal. It’s simple, but it’s a beginning. And of course it has never been done before.

Q: In the monkey paper it wasn’t the same. More monkeys didn’t necessarily mean more brain power?

A: No, we are not interested in that part in the monkeys. In the monkeys, we combined their brain activity because each monkey was doing a sub-task. We had a major task to move a virtual arm in 3D space, right? We divided that 3D task into 2D sub-tasks. Each monkey was responsible for a sub-task in 2D. So the movements were X, Y, Z, but each monkey was controlling mentally only two dimensions. When you combined monkeys, you got a 3D output.
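One way to picture splitting a 3D task into 2D sub-tasks is the Python sketch below. The assignment of axes to monkeys, the sample values, and the choice to average the two contributions on each shared axis are assumptions made for illustration; the interview says only that each monkey controlled two dimensions and that combining them gave a 3D output. The function name `combine` is hypothetical.

```python
from collections import defaultdict


def combine(contributions):
    """Merge per-subject 2D outputs into one 3D command.

    contributions: list of dicts mapping an axis name to a decoded value.
    ASSUMPTION: axes shared by several subjects are averaged.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for contribution in contributions:
        for axis, value in contribution.items():
            totals[axis] += value
            counts[axis] += 1
    return {axis: totals[axis] / counts[axis] for axis in sorted(totals)}


# Hypothetical decoded outputs: each subject covers only two of the three axes.
monkey_a = {"x": 1.0, "y": 2.0}
monkey_b = {"y": 4.0, "z": -1.0}
monkey_c = {"x": 3.0, "z": 1.0}

arm = combine([monkey_a, monkey_b, monkey_c])
```

No single dictionary contains all three axes, yet the merged result does, which mirrors the point that the 3D movement only exists once the sub-tasks are combined.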

So the basic questions we asked were: “Can we combine multiple brains to produce reliable outcomes using a brain-machine interface?” That is the reason we called it a shared brain-machine interface. I will explain why this will be important in the future. Imagine there is a patient who has Parkinson’s disease or epilepsy or is paralyzed. To get this patient into a neuro-rehab program, you need to train the patient, by using a brain-machine interface. The patient needs to learn how to use his or her brain activity to control a device that restores mobility, takes care of electrical seizures, improves the Parkinsonian tremor—or in stroke victims, produces language artificially.

Connecting brains of pros and patients

Well, we have learned from working with paraplegic patients for the last two years that the first stages of that training are difficult for patients. So we produced this brain-machine interface to combine the brain activity of rehab physical therapists, for example, with the patient’s brain, so that the physical therapists help the patient to get through the early phases of training faster, in a more rewarding way. So all this may help accelerate the process of neuro-rehabilitation.

Q: These are patients who have already gone through this?

A: No, these would be naïve patients, patients who are starting the training. The most critical phase of the training is the beginning. In humans you can do that in a non-invasive way; you can use an EEG for that.

Q: How would a brain-brain interface help a patient more than brain-machine interface?

A: After many years of being paralyzed, your brain literally forgets how to move paralyzed limbs. The concept of having legs, or moving legs, disappears from your mind. So to start training this patient, you would say, “Imagine moving your legs,” but the patient doesn’t even know what to do. He may try to imagine but nothing happens. The same is true for someone who had a stroke and cannot speak anymore, because the part of the brain related to language is totally destroyed. You need to train the other hemisphere of the brain to start talking. Well, imagine the guy cannot talk, but he has two physical therapists who can talk normally, who could produce brain activity that is related to vocalization normally. We could now combine the brain activity of these therapists with the patient’s brain, so that the patient’s brain is trained to learn again how to speak.

Q: Wouldn’t this be hard? Would the therapist have to be a very clear thinker?

A: Believe me, we will be doing it.

Q: But the physical therapist doesn’t know exactly how it is that he moves his leg, right?

A: But he does, every day. By using feedback we will get the patient’s brain remembering, reintroduced to the concept of having legs. We have done that using a brain-controlled exoskeleton, out soon in a paper. We even saw neurological recovery in this patient below the level of the lesion of the spinal cord: unique. This has never happened before to the degree we are reporting on.

Q: So by hooking a malfunctioning brain with a functional one, we may be both reviving old neurons, and instructing other neurons to take over for dead neurons, both?

A: Yes. The brain is plastic. What we need to do, in stroke, is accelerate adaptation, so patients can benefit.

Q: Would you say the coolest part is that it is so simple?

A: Yes.

Q: Very sci fi. What about the notion of conducting a mass Mr. Spock “mind meld” thing?

A: I like Mr. Spock but we are not there. The two of us have watched too many sci fi movies. There are things we will never communicate. We can improve conditions of individuals. But you are not going to be able to transmit your memories, your emotions, your high cognitive states. You are not going to be able to teach your kids a foreign language by brain-brain interface. I don’t think that will ever happen. But the concepts and technology may have beneficial impact.

All sorts of crazy things

Q: How about wiring 1000 human brains together to figure out world peace?

A: No, we cannot use digital algorithms to reduce that big a task to sub-tasks.

Q: Have you thought about something practical that 1000 brains could do?

A: Oh, absolutely. Believe me, I have thought about all these scenarios and more. But that I will keep for my book when it is published in a couple of years.

Q: What are you doing next?

A: All sorts of crazy things. Hopefully we are on the verge of improving the lives of people suffering from neurological disorders. That is my long term goal.